Machine Learning and Artificial Intelligence (AI) are the future. They are already used in numerous industries, such as healthcare, vehicles and cyber security, to name just a few.

In this blog we will explore how Machine Learning and AI can be used in one of the most popular forms of art - music. We will look at some of the most recent AI music projects and see how AI-generated music has evolved and how it compares to music made by humans.

First Known Use

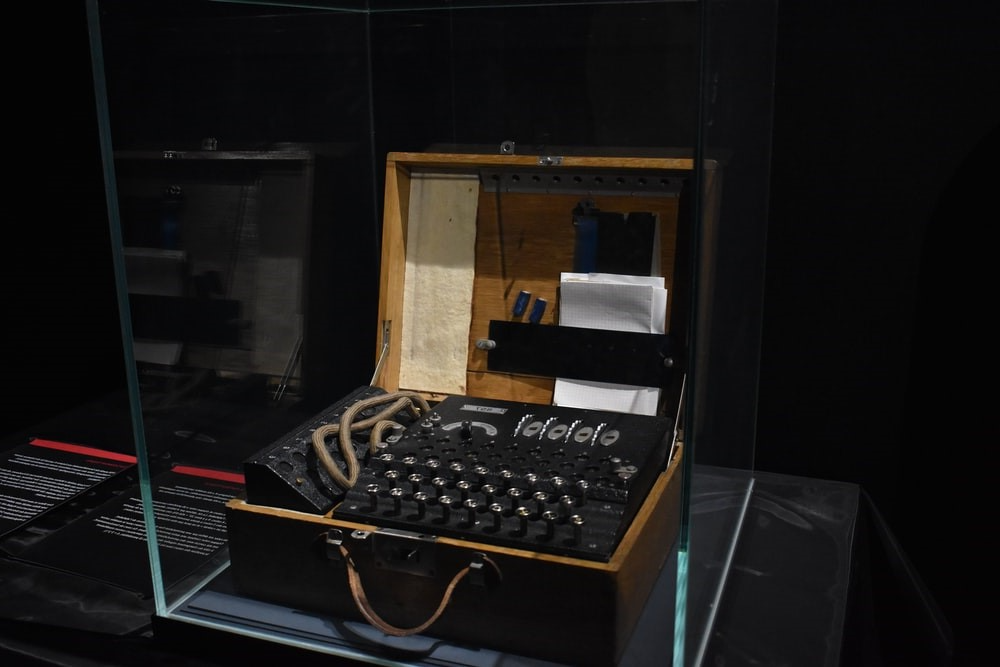

Computer-generated music was first recorded in 1951. It was created by computer scientist Alan Turing, who is best known for deciphering the Nazi Enigma code during World War 2.

He created the music at the Computing Machine Laboratory in Manchester, and the recording contains three melodies: the National Anthem, 'Baa, Baa, Black Sheep' and Glenn Miller's 'In the Mood'.

You can hear the recording for yourself here.

Whilst the recording is recognisably music, it is very distorted due to its age and likely does not fully capture how the computer actually sounded.

Although Alan Turing was the first to generate musical notes with a computer, he was not particularly interested in creating music. However, his work paved the way for some much more innovative concepts.

(Read the full story about Alan Turing and his music here: First recording of computer-generated music – created by Alan Turing – restored | Alan Turing | The Guardian)

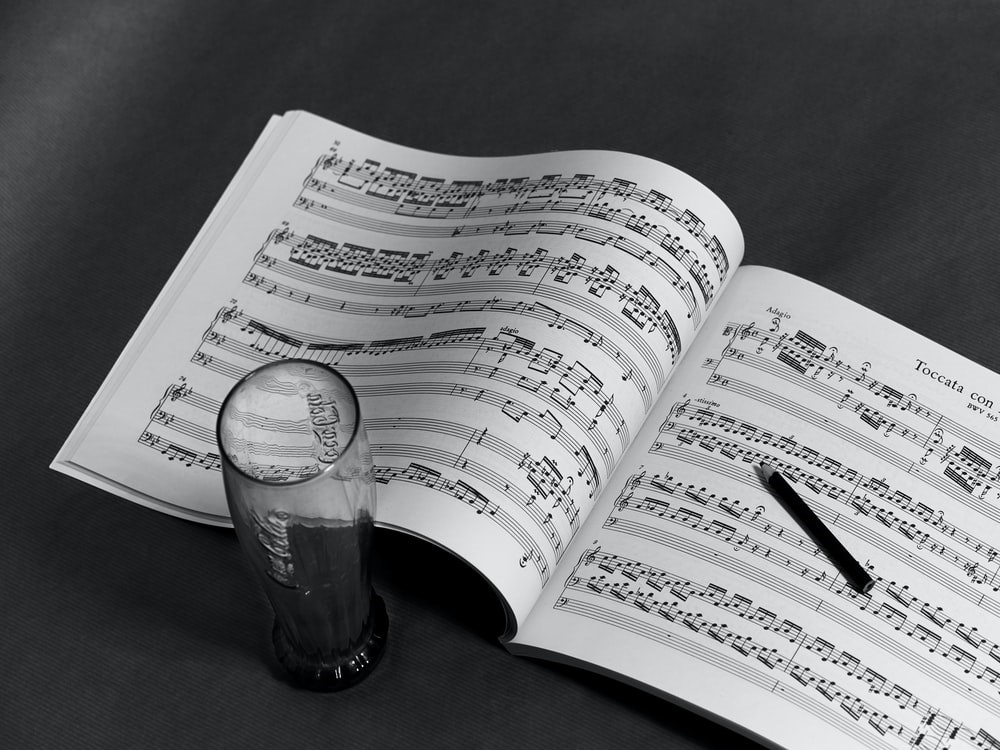

Google Doodle Johann Sebastian Bach

In a more modern-day application, Google created a Doodle to celebrate Johann Sebastian Bach's 333rd birthday. The Doodle lets you input piano notes and then uses Machine Learning to generate notes that harmonise with the ones you provided.

To make this possible, a machine learning model named Coconet is used. Coconet was trained on a dataset of 306 chorale harmonisations composed by Bach so that it can create new harmonisations in his style.

The model is trained by taking a sample of Bach’s music, randomly removing some of the notes and leaving Coconet to guess the missing ones. This process is repeated, with the guesses rewritten on each pass, until the model settles on the notes that harmonise best.
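To make the erase-and-guess idea more concrete, here is a rough sketch in Python. It is not Coconet: the real system uses a trained neural network, whereas the `guess_note` function below is a made-up stand-in that simply averages the nearest known notes. The function names, the `mask_fraction` parameter and the use of MIDI note numbers are all assumptions chosen for illustration.

```python
import random

def mask_notes(melody, mask_fraction, rng):
    """Hide a random subset of notes (set to None) and report which were hidden."""
    n_hidden = max(1, round(len(melody) * mask_fraction))
    hidden = sorted(rng.sample(range(len(melody)), n_hidden))
    masked = list(melody)
    for i in hidden:
        masked[i] = None
    return masked, hidden

def guess_note(notes, i):
    """Hypothetical stand-in for the model: average the nearest known neighbours."""
    left = next((notes[j] for j in range(i - 1, -1, -1) if notes[j] is not None), None)
    right = next((notes[j] for j in range(i + 1, len(notes)) if notes[j] is not None), None)
    known = [n for n in (left, right) if n is not None]
    # Fall back to middle C (MIDI 60) if nothing at all is known.
    return round(sum(known) / len(known)) if known else 60

def fill_in(masked, hidden, passes=3):
    """Repeatedly erase each hidden note and guess it again from everything else."""
    filled = list(masked)
    for _ in range(passes):
        for i in hidden:
            filled[i] = None                   # erase the current guess
            filled[i] = guess_note(filled, i)  # rewrite it using the other notes
    return filled

# A C major scale as MIDI note numbers, with a quarter of the notes hidden.
melody = [60, 62, 64, 65, 67, 69, 71, 72]
masked, hidden = mask_notes(melody, 0.25, random.Random(0))
filled = fill_in(masked, hidden)
print(masked)
print(filled)
```

In training, the guesses would be compared with the notes that were removed so the model can learn from its mistakes. In this sketch nothing is learned, so it only shows the shape of the loop.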

(You can try this out for yourself here and find out more about the process of its creation here: Celebrating Johann Sebastian Bach (google.com))

Dadabots Generates Heavy Metal Music

Dadabots are a team of musicians and computer scientists who have created an AI algorithm that generates heavy metal music in the style of existing heavy metal bands. Their album music is created by the AI system SampleRNN.

SampleRNN is a hierarchical Long Short-Term Memory (LSTM) network. Networks of this type learn the patterns in a sequence and use them to predict what comes next. SampleRNN could be used to predict sequences of just about anything, such as text, numbers or even the temperature outside, but in Dadabots’ case it predicts the raw acoustic waveforms of popular metal albums.

SampleRNN listens to the music and tries to guess what comes next, working in granular steps of less than a millisecond each. This process is iterated millions of times until it has created multiple hours of music. These hours of music are then processed using another tool, and the best parts are chosen to be produced into an album.
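The "guess the next part, then repeat" loop can be sketched in a few lines of Python. This is not SampleRNN: a real network learns its predictions from audio, while the `predict_next` function below is a fixed formula that just continues a 440 Hz tone. The 16,000 samples-per-second rate is also an assumption for illustration, but it shows why each step covers less than a millisecond.

```python
import math

SAMPLE_RATE = 16_000  # assumed samples per second (illustrative only)
TONE_HZ = 440.0       # pitch that the toy predictor continues
STEP = 2 * math.pi * TONE_HZ / SAMPLE_RATE  # phase advance per sample

def predict_next(history):
    """Hypothetical stand-in for the network: extend a sine wave from the last two samples."""
    return 2 * math.cos(STEP) * history[-1] - history[-2]

def generate(seed, n_samples):
    """Build audio one sample at a time, feeding each prediction back in as input."""
    audio = list(seed)
    for _ in range(n_samples):
        audio.append(predict_next(audio))
    return audio

seed = [0.0, math.sin(STEP)]                # the first two samples of the tone
audio = generate(seed, SAMPLE_RATE // 100)  # 160 new samples, i.e. 10 ms of sound

ms_per_sample = 1000 / SAMPLE_RATE          # duration of one prediction step
print(len(audio), ms_per_sample)
```

At this assumed rate a single second of audio needs 16,000 predictions, so hours of music quickly add up to millions of steps.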

(Find out more about who Dadabots are here: https://dadabots.com)

Daddy's Car

The last project I will discuss is “Daddy’s Car”, a song created by Sony CSL (Computer Science Laboratories) using AI. It was produced in the style of The Beatles and can be listened to here.

Sony CSL used a technology called Flow Machines to create the music. This AI system requires a library of existing music in a similar style to the song you want to create.

After the music library has been analysed, lead-sheets are generated based on the songs provided. The team creating the music can then choose the melodies they want to include in the song and use a technology called Mashmelo to map a singer’s voice onto the generated lead-sheet, creating vocals in the style and soul of the lead-sheet.

Once all the elements of the music are created, they can be combined to form the song Daddy’s Car.

(Visit this Sony CSL video to find out more: Flow Composer: how does it work? - YouTube)

Summary

Whilst AI in music is an excellent concept and I found myself enjoying the song Daddy’s Car as well as some of the work created by Dadabots, it is still early days, and the true heart and soul of music is very hard to imitate.

In the future, I would like to see AI accompany human-produced music to enhance its overall sound, as this creates exciting opportunities for innovative music. Given that music is constantly evolving, this is certainly something some musicians will want to take advantage of.