Recently I was working with a client to build an Azure analytics solution, replacing an existing on-premises SQL Server implementation. The scope of the project was to transform and model data from XML files and present the output through Power BI.

The solution designed was:

- Azure Data Lake Storage (ADLS) stores the XML files

- Azure SQL Database stores the transformed data, to which Power BI connects

- Azure Data Factory (ADF) orchestrates the extract, transform and load (ETL) process

The Challenge

The challenge that presented itself was moving the data from the XML files to the Azure SQL. ADF does not support XML as a file type, yet. (Read on for some exciting news from Microsoft about supported file types). Adding to the complexity was the fact that XML files were potentially 200MB and contained nested structures and arrays up to 8 levels deep.

We explored using the following approaches to overcome this challenge:

- Logic Apps

- Azure Batch

- Azure Databricks

A Logic App could convert the XML into a supported file type such as JSON. However, the complex structure of the files meant that ADF could not process the JSON file correctly.

Either Azure Batch or Azure Databricks could have been used to create routines that transform the XML data, and both are executable via ADF activities. Our client had a requirement to manage and modify the solution independently. Therefore we decided against introducing extra Azure services instead using a service that was already part of the solution and aligned to their existing skill sets.

Our Solution

Azure SQL Database provides both support for manipulating semi-structured data such as XML, and the ability to connect to external data sources such as files. Using a combination of these features we were able to load the data from the XML files into an Azure SQL Database. By using stored procedures, the relevant code could be executed directly from ADF.

Firstly, in Azure SQL Database, we created an external data source to ADLS. We used the OPENROWSET command in T-SQL to connect to individual XML files via the external data source and insert the data into a staging table as XML. Finally, with the data staged, the XML can be transformed using nodes() method.

The steps we followed to achieve this were:

- Create a database master key.

SQL Server uses database master keys for the management of other keys. If your database already has a master key, skip this step.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '&MyStr0ngP@$$w0rd';

- Create a database scoped credential.

A database scoped credential is the credential that the database uses to access external locations, in our case ADLS. When accessing blob storage accounts, a shared access signature (SAS) token, for the storage account, must be generated and used as the identity.

CREATE DATABASE SCOPED CREDENTIAL ExampleCredential WITH IDENTITY = 'SHARED ACCESS SIGNATURE', SECRET = 'SAS Token with leading ? removed here';

- Create an external data source.

Using the previously created credential, we connect to the ADLS putting using the ADLS endpoint URL into the location parameter.

CREATE EXTERNAL DATA SOURCE ExampleDataSource

WITH (

TYPE = BLOB_STORAGE,

LOCATION = 'https://MyDataLake.blob.core.windows.net',

CREDENTIAL = ExampleCredential

);

- Use the external data source to bulk load XML data into a staging table.

The external data source created in the previous step points to the root directory of the ADLS. Therefore, we must provide the full path of the data file to the BULK argument.

CREATE TABLE dbo.StagingTable ( XMLData XML NOT NULL ); INSERT INTO dbo.StagingTable (XMLData) SELECT BulkColumn FROM OPENROWSET ( BULK 'MyContainer/MyFolderPath/MyXMLFile.xml' ,SINGLE_BLOB ,DATA_SOURCE = 'ExampleDataSource' ) AS DataFile;

- Once loaded into a table T-SQL is used to query and manipulate the XML. A simple select statement will return the XML.

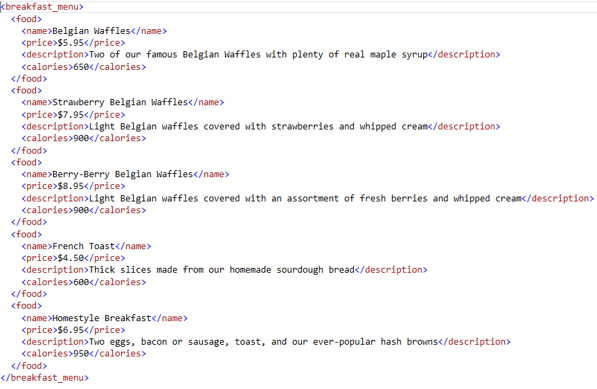

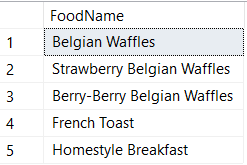

Using the OUTER APPLY function and Nodes() method, we can write more complicated queries such as returning the name of all the food items from the breakfast menu.

SELECT f.XMLData.value('(name/text())[1]','varchar(100)') AS FoodName

FROM [dbo].[StagingTable] t

OUTER APPLY t.XMLData.nodes('/breakfast_menu') AS bm(XMLData)

OUTER APPLY bm.XMLData.nodes('food') AS f(XMLData)

Using some dynamic SQL to parameterise the process, enabling multiple files to be loaded, we implemented steps 4 and 5 as stored procedures that could be executed directly from our ADF pipelines.

Updates from Microsoft

Now, as promised earlier, some exciting news from Microsoft. Recently on the ADF forums, the idea to include support for XML file types as a data source has been updated to have a status of 'started'. In the coming months, we will be able to connect to XML files and imported them to Data Lakes or Databases using the copy activity and mapping data flows. Progress updates, as and when they happen can be found here.

Similarly, find updates for an even more sought-after file type here. ADF may soon have a connector to Microsoft Excel!

Update 18/06/2020: Microsoft Excel is now a supported file type for data sets in ADF.

Update 26/10/2020: As of July 2020 XML is now a supported file type in ADF