Pet servers are the servers you name and nurture (and love?). Cattle servers are disposable, identified only by number: when one gets sick, it is “…taken out back, shot, and replaced…”. Containers offer a new way to think about the services we deliver, processing our cattle-like servers into something we simply consume: mince.

What are containers?

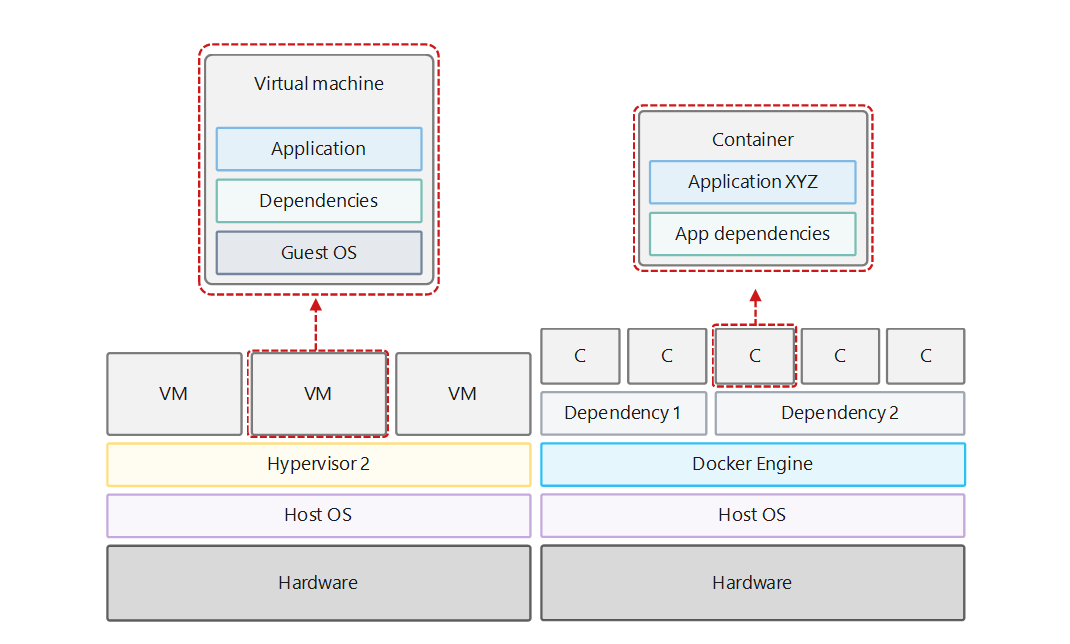

Containers offer a new way to abstract away the details of the OS and hardware, providing a lighter-weight form of virtualization. Virtualization has focused on replicating, near like-for-like, everything we were familiar with from physical servers: the operating system, storage and networking. Containers continue this approach, but replace the hypervisor and guest OS with a container engine running directly on the host OS. That engine hosts many containers, each packaging all the dependencies your app needs while sharing the host's kernel. The smaller footprint of each container offers the potential for greater compute density and new scaling options. A new container can be provisioned from an image very quickly, and deprovisioned just as quickly. Containers work especially well for stateless workloads, although relational database workloads can benefit too.
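To make the density point concrete, here is a back-of-the-envelope sketch. Every figure in it is a hypothetical assumption, not a measurement: a host with 64 GB of RAM, an app that needs 512 MB, a guest OS costing 2 GB per VM, and 64 MB of overhead per container. It also ignores the memory used by the host OS and hypervisor themselves.

```python
# Illustrative only: every figure below is a hypothetical assumption,
# not a measurement or a vendor specification. Sizes are in MB.
HOST_MEMORY_MB = 64 * 1024        # assumed RAM available on one host
APP_MEMORY_MB = 512               # assumed RAM the application itself needs
GUEST_OS_OVERHEAD_MB = 2048       # assumed RAM a full guest OS consumes per VM
CONTAINER_OVERHEAD_MB = 64        # assumed per-container overhead (kernel is shared)


def instances_per_host(host_mb: int, app_mb: int, overhead_mb: int) -> int:
    """How many copies of the app fit on one host, given a per-instance overhead."""
    return host_mb // (app_mb + overhead_mb)


vm_count = instances_per_host(HOST_MEMORY_MB, APP_MEMORY_MB, GUEST_OS_OVERHEAD_MB)
container_count = instances_per_host(HOST_MEMORY_MB, APP_MEMORY_MB, CONTAINER_OVERHEAD_MB)

print(f"VMs per host:        {vm_count}")
print(f"Containers per host: {container_count}")
```

Under these assumptions the same host fits 25 VMs but 113 containers; the real ratio depends entirely on your workloads and images.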

When you start looking into server containerization you will quickly see the same names coming up: Docker & Kubernetes.

Docker

Although other runtimes are available, Docker has risen to the top to become the de facto standard container runtime. Built to the specifications of the Open Container Initiative (OCI), which Docker helped establish, the runtime ‘containerizes’ the application you’re running: it turns the container image you’ve created into a running environment with the libraries and environment variables your workload needs, isolated both from other containers on the same host and from the host’s own resources.

The Docker ecosystem also includes other container tooling, including orchestration (Docker Swarm), but the Docker Engine is the jewel.

Kubernetes

While Docker provides the runtime for the containers, Kubernetes provides a way to orchestrate and manage them. Kubernetes is an open-source tool, originally developed at Google, that manages the deployment and scaling of containers as well as their storage and networking. With Kubernetes you can deploy containers across a cluster of servers, giving you high availability (HA) options and burst capacity for workloads that support it.
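As a sketch of what deploying containers across a cluster looks like, the Python below builds a minimal Kubernetes Deployment manifest and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The `deployment_manifest` helper, the `web` name and the `nginx:1.25` image are illustrative assumptions. The `replicas` field asks Kubernetes to keep that many copies of the pod running, replacing them if a node fails.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal Kubernetes Deployment (apps/v1) as a plain dict (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many pods running across the cluster.
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3, port=80)
    print(json.dumps(manifest, indent=2))
```

Redirected to a file, the output could be applied with `kubectl apply -f web.json` and later scaled with `kubectl scale deployment web --replicas=5`. On its own, `replicas` doesn't guarantee the pods land on different nodes; topology spread constraints or pod anti-affinity can enforce that for HA.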

Virtualization vs Containerization

How do you deploy containers?

You could deploy Docker and Kubernetes on your own servers, but that doesn’t seem to be in the spirit of things. Azure offers two PaaS options to achieve the same end:

- Azure Container Instance (ACI)

- Azure Kubernetes Service (AKS)

ACI

ACI lets you quickly run single-node deployments rather than the clustered deployments supported by AKS. While this removes some of the associated complexity (and cost), you lose control over HA and scaling. Because ACI is a single-node model, you pay only for how long the container runs, billed per second for the vCPU and memory allocated to it, which allows tight control over costs.
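To illustrate per-second billing, here is a minimal cost sketch. The rates are placeholders, not real Azure prices (check current pricing for your region), and `aci_cost` is a hypothetical helper. `Decimal` is used to avoid floating-point rounding on such small per-second amounts.

```python
from decimal import Decimal

# Placeholder rates for illustration only: NOT real Azure prices.
PRICE_PER_VCPU_SECOND = Decimal("0.0000113")
PRICE_PER_GB_SECOND = Decimal("0.0000012")


def aci_cost(vcpus: Decimal, memory_gb: Decimal, seconds: int) -> Decimal:
    """Cost of a container billed per second for its allocated vCPU and memory."""
    per_second = vcpus * PRICE_PER_VCPU_SECOND + memory_gb * PRICE_PER_GB_SECOND
    return per_second * seconds


if __name__ == "__main__":
    short_run = aci_cost(Decimal("1"), Decimal("1.5"), 10 * 60)        # a 10-minute job
    full_day = aci_cost(Decimal("1"), Decimal("1.5"), 24 * 60 * 60)    # left running all day
    print(f"10-minute run:   ${short_run:.4f}")
    print(f"Running all day: ${full_day:.2f}")
```

Because cost scales linearly with seconds run, a short-lived job pays only for its actual lifetime, which is where the tight cost control comes from.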

AKS

Rather than the single node deployments used by ACI, AKS offers the ability to spin up a multi-node cluster.

At a glance it looks like any other PaaS offering, but behind the scenes (and not very far behind them, either), it’s really an ARM template deploying a set of load-balanced VMs and associated resources into an additional resource group (the node resource group).

This model has its advantages, though: you can deallocate the VMs when the cluster isn’t in use, which can cut costs dramatically. It also offers a real peek behind the curtain at how some other PaaS offerings might operate in the background.
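A rough sketch of why deallocation matters, assuming a hypothetical three-node cluster at a placeholder price of 20 cents per node-hour, compared with running it only ten hours a day, 22 days a month. It counts compute only: deallocated VMs stop accruing compute charges, but their managed disks are still billed. The Azure CLI’s `az aks stop` and `az aks start` commands stop and start a whole cluster in this way.

```python
def monthly_node_cost_cents(nodes: int, hours_running: int, price_per_node_hour_cents: int) -> int:
    """Compute-only cost: each node is charged for every hour its VM is allocated."""
    return nodes * hours_running * price_per_node_hour_cents


NODES = 3                      # hypothetical cluster size
PRICE_CENTS = 20               # placeholder price per node-hour, not a real rate
ALWAYS_ON_HOURS = 24 * 30      # allocated around the clock for a 30-day month
BUSINESS_HOURS = 10 * 22       # allocated 10 hours a day, 22 working days

always_on = monthly_node_cost_cents(NODES, ALWAYS_ON_HOURS, PRICE_CENTS)
business_only = monthly_node_cost_cents(NODES, BUSINESS_HOURS, PRICE_CENTS)
saving = 1 - business_only / always_on

print(f"Always on:              ${always_on / 100:.2f}")
print(f"Deallocated off-hours:  ${business_only / 100:.2f}")
print(f"Compute saving:         {saving:.0%}")
```

With these assumed figures the bill drops from $432.00 to $132.00, a 69% saving on compute.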

Why use containers?

A great use case for containers is Continuous Integration (CI) and Continuous Deployment (CD) workflows in organisations running databases on SQL Server. Using a disposable database container for development and testing allows the environment to be provisioned rapidly, while using an image as the source ensures consistency across environments and deployments.
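A minimal sketch of a disposable SQL Server container for a CI step, assuming Docker is installed on the build agent. It uses Microsoft’s `mcr.microsoft.com/mssql/server:2022-latest` image and its `ACCEPT_EULA` and `MSSQL_SA_PASSWORD` environment variables; the helper names `run_args` and `disposable_sql_server` are hypothetical.

```python
import subprocess
from contextlib import contextmanager
from typing import Iterator, List

SQL_IMAGE = "mcr.microsoft.com/mssql/server:2022-latest"


def run_args(name: str, sa_password: str, host_port: int = 1433, image: str = SQL_IMAGE) -> List[str]:
    """Build the `docker run` command for a throwaway SQL Server container."""
    return [
        "docker", "run",
        "--detach",
        "--rm",                                   # delete the container as soon as it stops
        "--name", name,
        "-e", "ACCEPT_EULA=Y",
        "-e", f"MSSQL_SA_PASSWORD={sa_password}",
        "-p", f"{host_port}:1433",                # expose SQL Server's default port
        image,
    ]


@contextmanager
def disposable_sql_server(name: str, sa_password: str, host_port: int = 1433) -> Iterator[str]:
    """Start the container, hand its name to the caller, then always tear it down."""
    subprocess.run(run_args(name, sa_password, host_port), check=True)
    try:
        yield name
    finally:
        # Stopping is enough: --rm removes the container once it has stopped.
        subprocess.run(["docker", "stop", name], check=False)
```

A pipeline step would wrap its migrations and tests in `with disposable_sql_server("ci-sqldb", password):`, taking the password from the CI system’s secret store. SQL Server needs a few seconds after the container starts before it accepts connections, so a real pipeline should poll until it is ready.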