Maintaining database backups is an essential part of most DBA's or Sys Admin's job descriptions, yet it's common to find deployments of larger databases where the time taken to run a full backup means that they are not being taken as regularly as possible. Using differential backups is all well and good but for some databases they are almost as large as a full backup, especially after a 3 or 4 days. In this post I will run through some optimisation tips that may help you keep backup and verification times to a minimum.

- Ensure you are backing up to a separate disk or set of disks than those where the database lives - this actually vital to ensuring a single failure does not wipe out data and the most recent backup and you should check you really are using separate disks, not just a different logical drive on the same physical disks or RAID array.

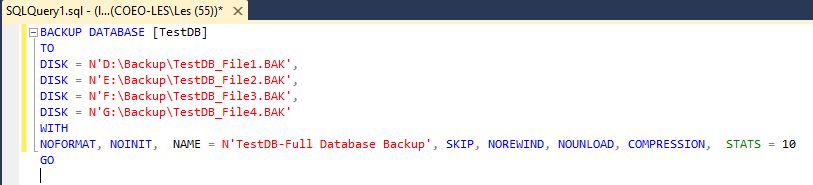

- If you have it, use backup compression - it has been available in Enterprise Edition since SQL Server 2008 and Standard Edition since SQL Server 2012 but is disabled by default.

- Backup jobs should have two steps - the actual backup, followed by a backup file verification; which benefits from compression more than the actual backup process.

In order to illustrate the difference these make I performed some tests with different conditions to demonstrate how the backup and verify duration of even a small database can be optimised. These tests were carried out on my laptop with extra storage provided by external USB 3.0 devices.

| CPU | RAM | Drive 1 | Drive 2 | Drive 3 | SSD |

| Core i7 Dual Core with Hyper Threading (4 logical Processors) | 8 GB DDR3 1600MHz | USB3.0 5400rpm SATA | USB3.0 5400rpm SATA | USB3.0 5400rpm SATA | Samsung 512GB mSATA |

| Destination | Compression Enabled Y/N | # Backup Files | Backup Duration | Verification Duration | Total Duration |

| Drive 2 | N | 1 | 1 minute 35 seconds | 1 min 28 seconds | 3 min 3 seconds |

| Drive 2 | Y | 1 | 1 minute 31 seconds | 23 seconds | 1 min 54 seconds |

| Drives 2 + 3 | Y | 2 | 1 minute 29 seconds | 13 seconds | 1 min 42 seconds |

| SSD | Y | 4 | 1 minute 25 seconds | 6 seconds | 1 min 31 seconds |

| Destination | Compression Enabled Y/N | # Backup Files | Backup Duration | Verification Duration | Total Duration |

| Drive 2 | N | 1 | 1 min 18 seconds | 1 min 13 seconds | 2 min 31 seconds |

| Drives 1 + 2 + 3 | N | 3 | 25 seconds | 25 seconds | 50 seconds |

| Drive 2 | Y | 1 | 21 seconds | 22 seconds | 43 seconds |

| Drives 1 + 2 + 3 | Y | 3 | 23 seconds | 9 seconds | 32 seconds |

- You should never backup to the same physical disks that your data sits on.

- You should always verify that your backup files are valid, at the end of each backup.

- If you have an edition of SQL Server that supports compression, you should use it - it will help reduce the backup file verification time considerably.

- If you do not have compression just using multiple backup files may greatly reduce backup and backup file verification times.

- For very large databases the marginal gains of combining backup compression with multiple backup files will likely be noticeable.