As a SQL Server DBA, you may be working in environment that is monitored by System Center Operations Manager. If so, you’ll probably spend a lot of time in building a monitoring platform and configuring it to ensure that the DBA team get woken up when things start (or about to start) going wrong.

Within the Managed Support team at Coeo, SCOM is one of the tools we use to monitor all of our customer environments, so the DBA team rely on accurate alerting. In other words, when something goes wrong (or ideally before it goes wrong) we need to be alerted quickly. Conversely, if nothing is going wrong we don’t want to unnecessarily get woken up – a tired DBA team is not an effective DBA team.

However, the reality is that, a monitoring system is something that you'll continually evolve and tweak; and even attempt to perfect - but sometimes, it just goes wrong.

I hope that this will be the first in a series of posts that touch upon monitoring SQL Server and the tools that we use to monitor our environments. The aim of these tools is to help us react to problems before they become issues and ultimately reduce unscheduled downtime (i.e. loss of revenue). However, another important purpose of the tools is to serve us DBAs so that we can focus on the important things at the important times.

The problem

In this post I want to talk about what happens when monitoring goes wrong, with a specific view on problem we recently experienced. If you’re managing SQL Server environments with SCOM then you may possibly face this issue at some point. Even if you don’t manage your environment with SCOM, there may be some interest in the observed SQL Server behaviours that, at least I, hadn’t come across before.

The Coeo DBA team recently received an out-of-hours emergency alert indicating that one of our customer’s mission critical databases was full. This in itself was quite strange in that we would have expected to receive warning and critical alerts when the database reached 80% and 90% full respectively. In this instance, the on-call DBA logged onto the environment and on investigation found that impacted database was healthy and had more than 300 GB of available space (taking into account free space on the disk, into which the database could grow).

Some fifteen minutes later, another emergency alert was received for exactly the same issue, on the same database. And some 10 minutes thereafter, again. In both cases the database was healthy and hadn't suddenly grown by 300 GB (that would have made for a different, but equally interesting blog post).

Now, if you’ve ever been responsible for supporting a 24*7 environment, you’ll know that there’s nothing like a series of alerts in the early hours of the morning that will focus the mind in trying to prevent this from ever happening in future. Even more so if the alerts that are keeping you awake are false alerts, as appeared to be the case in this instance.

Investigating the Problem

In the first instance, it was clear that SCOM was incorrectly reporting the size of the database. It was therefore important to identify how SCOM was getting its information.

Now, in this particular case, the SCOM monitor that resulted in the alert being raised was the “DB Total Space” monitor, which targets a SQL Server 2012 DBEngine. This monitor lives in the “Microsoft.SQLServer.2012.Monitoring” management pack so I opened an (xml-exported) copy of this management pack and started searching.

This quickly led to me identifying the script “GetSQLDBFreeSpace.vbs”, within which there’s a function called GetAllDBFreeSpace that calls the following T-SQL script:

SELECT size / 128.0 as fileSize,

FILEPROPERTY(name, 'SpaceUsed') / 128.0 as fileUsed,

CASE WHEN max_size = -1 OR max_size = 268435456 THEN -1 ELSE max_size / 128 END as fileMaxSize,

CASE WHEN growth = 0 THEN 0 ELSE 1 END as IsAutoGrow,

is_percent_growth as isPercentGrowth,

growth as fileGrowth,

physical_name

FROM sys.master_files WITH (NOLOCK)

WHERE type = 0 AND is_read_only = 0

AND database_id = db_id()

So this is great as we now know what SCOM is running against the SQL Server instance in order to determine the size of the database.

The next step was to run the above query on the target database and, as expected, it returned a list of files and their corresponding file sizes. Everything seemed normal and this was reflected in that fact that, at this moment in time, SCOM was correctly reporting the size of the database.

The Curious Case of the Phantom Data File

On further investigation I noted that, at around the same time we received the alerts, a number of data files had been deleted from the database as part of a partition sliding window operation that was scheduled for that time.

This got me to thinking about whether it was possible that the data file modifications that were occurring contributed to the false alerts. So, with this in mind, I set up a database on my test environment with a couple of data files. I won’t include the scripts to create the test database but you can simply try this yourself by creating a new database with 2 data files. Make sure the database is in the full recovery model. I next ran the above query and everything looked as I would expect.

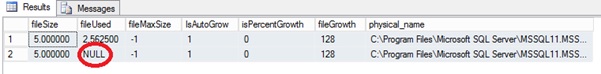

I then deleted one of the data files and re-ran the above query. Here’s the output

As you can see, one of the data files returned a fileUsed value of NULL.

We’ve clearly deleted that data file. However, the above query is returning some data for the file in question, but the FILEPROPERTY function returns a NULL.

So I next pulled the data straight from sys.master_files for this database:

select * from sys.master_files where database_id = db_id()

If you’re running through this same test, you’ll see that the file we just deleted is still listed in sys.master_files and has a state_desc value of “offline”. Incidentally, if you were to look in sys.database_files you’d see the same thing.

So the next question on my mind was, if we’ve just deleted the file, then why is the corresponding row in sys.database_files and sys.master_files; and when do these rows get removed?

At this point I thought to attempt what would happen if I tried to create a file with the same name as the one I had just deleted. Technically it should be possible because I’ve deleted the file, but then the data in the DMVs would suggest that it should not be possible. Here’s the resulting error message when I tried to create a file with the same name:

File 'NewFile' cannot be reused until after the next BACKUP LOG operation.

So that clears that question up. I may have deleted the file but as far as SQL Server is concerned, I need to run a log backup if I want to reuse the file name. Subsequent to running a log backup I found that the records were removed from sys.database_files and sys.master_files. Note that if your database is not in full recovery model then the rows don’t appear to persist in these DMVs.

Note that the SQL Server documentation at http://technet.microsoft.com/en-us/library/ms191433.aspx does make it clear that we should always backup a database immediately after adding or deleting files. However, what’s interesting is that there’s no mention of the importance of running a log backup.

SQL Server DBAs

If you’re responsible for managing a SQL Server environment, remember to always perform a log backup after adding or deleting data files to/from your databases.

As DBAs, our effectiveness has a very close relationship with the effectiveness of our monitoring systems. We can’t just be responsible for our SQL Server environments but we need to also pay close attention to our monitoring tools. In upcoming posts I hope to further explore subjects relating to monitoring SQL Server.

SCOM Administrators

If you’re responsible for managing SCOM environments where SQL Server is actively monitored and the DBA wants to know why he/she got woken up at 2am for a false “database is full” alert; ask them whether any data file addition or deletion operations were occurring at the time, and whether a log backup was subsequently taken.