Ready to create an enterprise Power BI solution with no data warehouse? Read on.

I know you have many business intelligence options already, but strap in, here comes another. What are dataflows?

- Self-service data preparation

- Power Query in your browser

- A single version of the truth

- Massive scale

- A common schema

That all sounds great, but where do you start?

- Power BI service

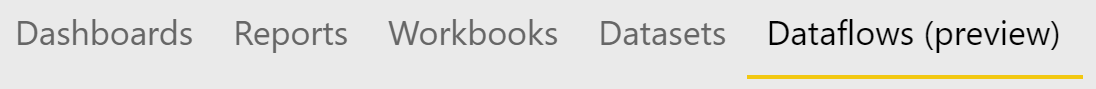

- In your workspace, alongside the familiar dashboards, reports and datasets, you will see dataflows

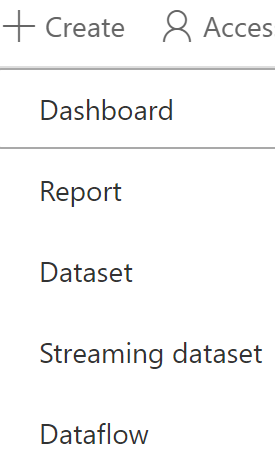

- Click “+ Create” and choose dataflow

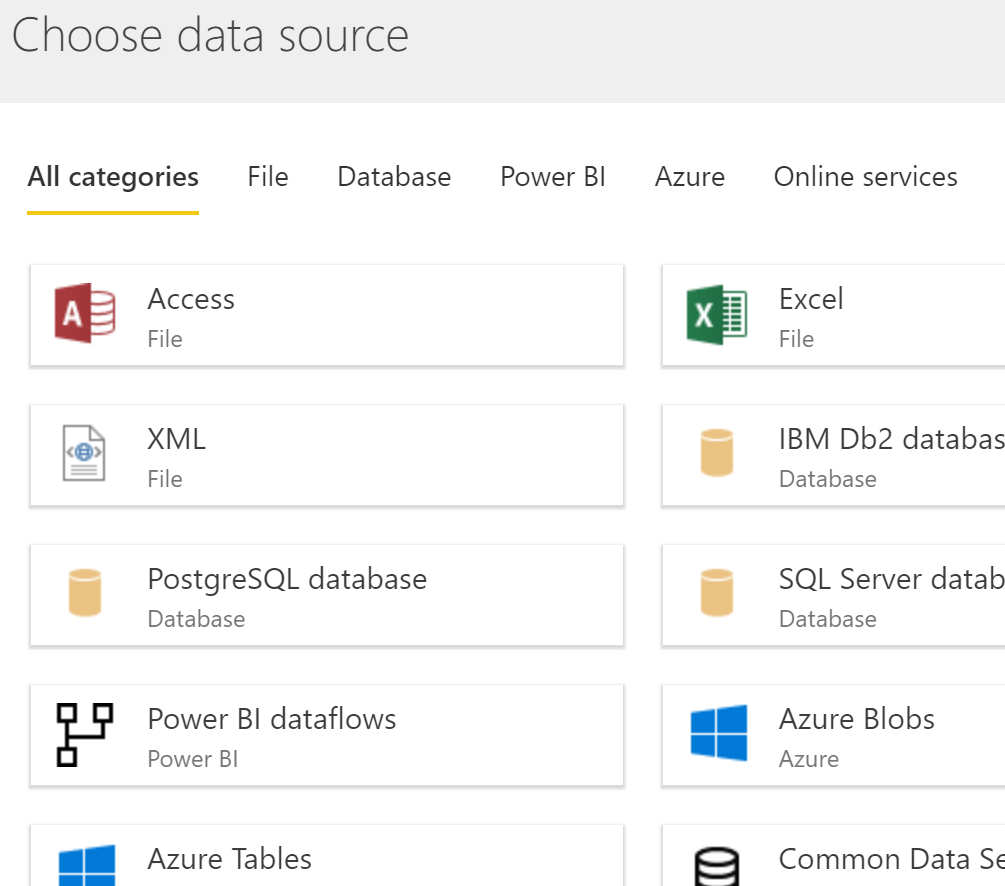

- Connect to a data source and use Power Query in your browser to transform data

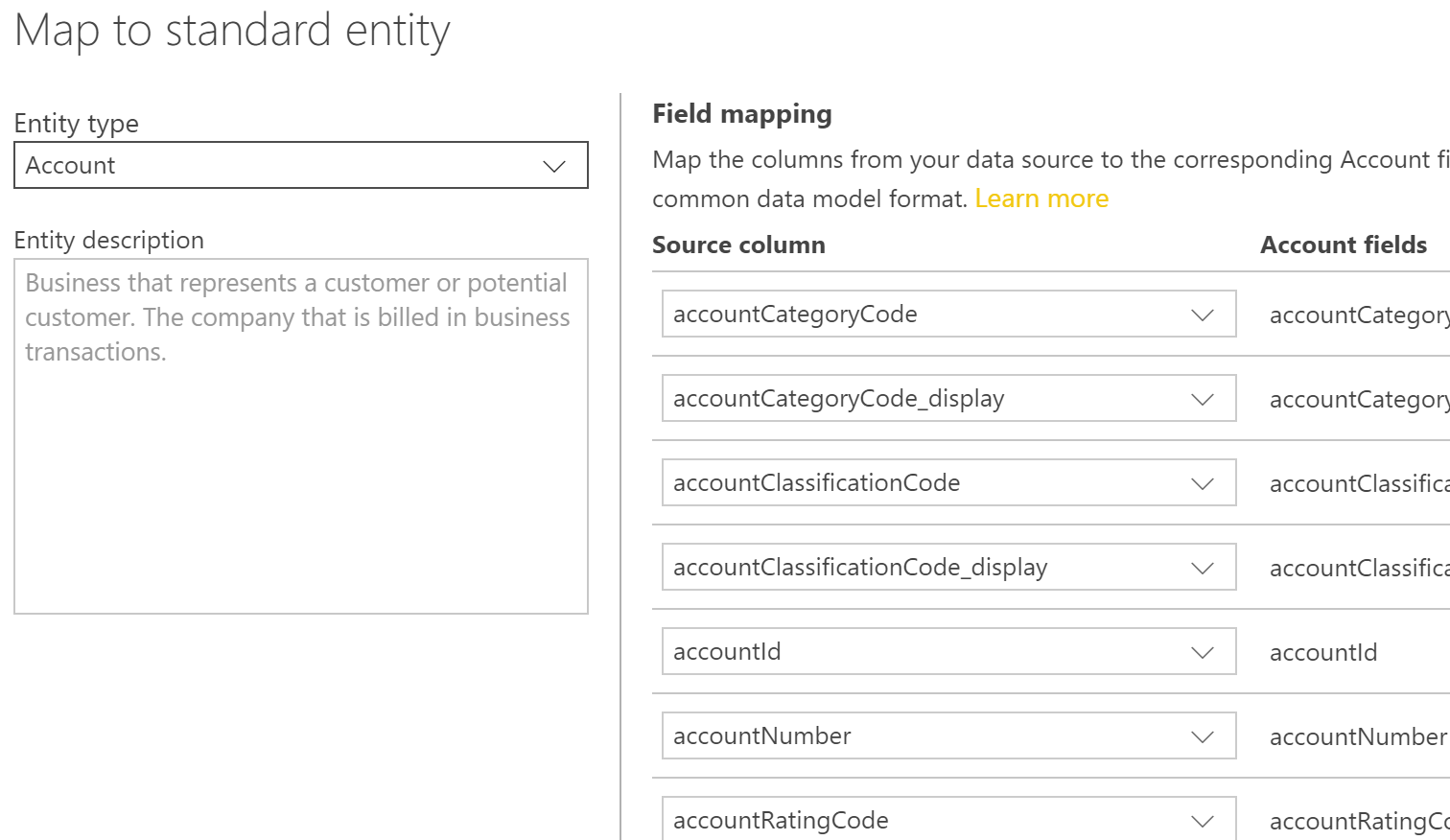

- Click “Map to standard” to map your data to the common data model

- Save your dataflow and specify a refresh schedule

- Open Power BI desktop, specify the dataflow as your source

- Publish to the Power BI cloud service

- You have both the dataflow and a familiar dataset

- The cleansed curated dataflow was defined once and is now available to everyone within your company

This single version of the truth sounds like a data warehouse and Azure Analysis Services that you might already be using. Why should you rip up your existing architecture and start using dataflows?

- Self-service data preparation – making Extract, Transform and Load logic accessible to non-IT people using the intuitive Power Query interface.

- Browser-based - dataflows introduces Power Query directly within your browser, an exciting development. Maybe the Power BI desktop will disappear one day, and you will do all development in your browser?

- Inheritance – dataflows can reference each other. Refresh one, and all the cascading updates are handled for you automatically in a consistent manner (Power BI premium required).

- Scale – unlike conventional Power BI datasets, dataflows are stored in Azure Data Lake storage Gen2 (https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-introduction). This integration is handled automatically for you offering unlimited scale. You can alternatively use your own Azure Data Lake Storage Gen2 account and expose the data to other services such as Databricks.

- Common Data Model – use an industry standard data model. After your data is imported and transformed, you can optionally map it to the common data model (CDM) (https://docs.microsoft.com/en-us/common-data-model/). CDM is an industry standard schema for entities such as Account, Address and Order. Once you map data to the CDM, other apps understand it such as PowerApps, Dynamics 365 and Power BI. You’re future-proofing your data model, who knows what services and applications will be offered in the future that can interpret your CDM data with little further effort required?

That was the case for dataflows, in the interest of fairness, let's look at the negatives:

- Duplicate measures – dataflows still require a dataset. Users consuming that dataflow into different datasets must duplicate DAX measures. An alternative architecture using Azure Analysis Services is a true single version containing all DAX measures. Azure Analysis Services cannot use a dataflow as a data source.

- Row-level security – like duplicating DAX measures, you need to repeat row level security in each dataset, you cannot add row level security to dataflows. Bear in mind when designing your architecture that self-service report creators with edit workspace permissions bypass Power BI row level security. Again, Azure Analysis Services works great as an alternative here. Row-level security defined once in Analysis Services applies to all users regardless of Power BI permissions.

- Chained refreshes – your architecture now includes a dataflow and dataset. You must refresh both entities in the correct order for Power BI users to visualise the latest data. There is no orchestration method to schedule the dataset after the dataflow. There is nothing new here, you have always refreshed a data warehouse and semantic model independently, but worth bearing in mind.

- Slowly changing dimensions – a data warehouse contains slowly changing dimensions, a key business intelligence concept to ensure an audit trail. Implementing slowly changing dimensions are very difficult if not impossible using dataflows.

- Source code control – unlike Azure Analysis Services using Visual Studio, there is no support for source code control using dataflows. Managing change is a manual process with no continuous integration. Testing and reliably releasing change is even more important using stacked dataflows that consume each other.

- Direct query – you are duplicating data in the dataflow and dataset. There is no option for direct query (leaving data at source), or live connection (deploying the data model to Analysis Services).

- Feature parity – Power Query in the browser is not as feature rich as the Excel, Power BI and Analysis Services versions (yet)

The Azure platform is your number one choice for Microsoft applications - you a future-proofing your architecture. Upgrades, patching, new features and security updates are applied on your behalf automatically with no user action.

The common data model introduces the concept of future-proofing your data in addition to the infrastructure. If you adhere to the consistent common data model contract, you can take advantage of tools and applications that can interpret and enrich your data with little development effort, a truly exciting prospect.