Update - April 2018:

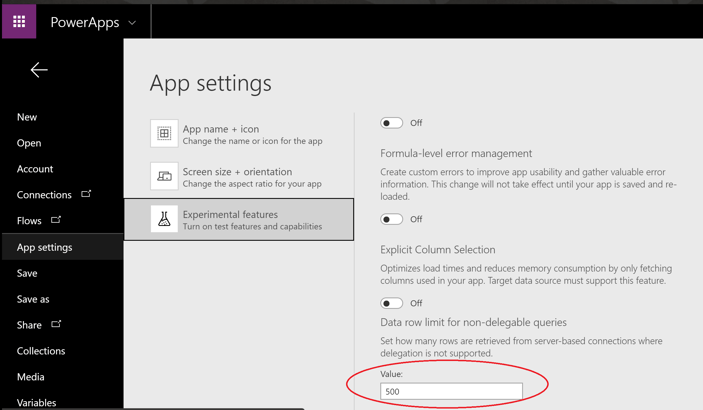

PowerApps now has an experimental feature that can be turned on to increase the 500 row limit. I've not tested it with large sets but its a nice step forward.

To increase the limit, in the app developer studio (web or desktop) go to File->App Settings->Experimental Features and scroll to the bottom of the list. There you will see a box that allows you to enter a number to set the limit higher for your app

With the recent update to Power BI which enables developers to embed PowerApps directly into reports, I feel the adoption of PowerApps will accelerate greatly when people start to realise the potential of this union of technologies.

I’ve been fortunate enough to be able to work with PowerApps on a large enterprise scale project and I feel the biggest consideration that needs to be taken into account is a design decision made by the PowerApps team called delegation, and the 500 record limit that is associated with it.

What is Delegation?

You see, PowerApps is mainly aimed at mobile devices such as phones and tablets. As these devices generally are not as powerful or have as much memory as laptops and desktops, delegation was introduced as a mechanism for reducing the processing done on device.

The basic principle is to push (delegate) as much processing down onto the data layer as is possible, and have the data layer do the heavy lifting and return the results. In theory this is a great idea, however not all data sources support delegation and, at the time if writing, only a small subset of functions support delegation.

Again at the time of writing you can do simple things such as basic sorting, filtering and searching for records (however there are a lot of ways this falls down too).

Other basic functions, such as simple aggregations (count, sum, average etc) do not currently support delegation, although I’m pretty sure this won’t be like that forever.

For a full list of functions and data sources that support delegation see the link below

https://powerapps.microsoft.com/en-us/tutorials/delegation-overview/

The 500 Record Limit

Ok so, if either the data source, or any of the functions used in a formula (as it is quite common to nest functions) don’t support delegation, then PowerApps attempts to pull all the data from the referenced data source down locally. However, due to PowerApps being targeted at mobile, it will only ever get the first 500 records.

So lets say for example your data source is a SQL Server table, which does support delegation, and this table contains 10,000 records, if you use a function as part of a formula that doesn’t support delegation you will only ever get the first 500 records and it does this silently.

Now you may get a little blue dot on your formula telling you that this code doesn’t support delegation, but from my experience this is not always the case. This can lead to situations of a developer (i.e. you) chasing your tail when data doesn’t reconcile between the source and the app.

The Workaround

To work around this, I devised a way to use something called Collections within PowerApps. Collections are essentially variant type arrays, for those of you with a programming background. For those not fortunate(?) enough to have that background, collections are just a table where columns that can store anything from text to pictures and even other collections.

The key to this is two fold, firstly I discovered that the 500 record limit does not apply to collections, i.e. you can store as many records in a collection as you like.

Secondly I had initially thought that the 500 record limit was imposed in each function that didn’t support delegation, however it turns out that’s incorrect, which means, once the data is loaded into a collection, regardless of the number of records, all functions work across all data in the collection. Great right?

So all we need to do is get the data into a collection and we can use that instead of the data source in the app.

It does mean anywhere you would update the data you need to update the collection and the base data source so that they stay in synch, which does present some issues itself, but these are achievable too.

This approach relies on your data source having a numeric unique ID on each record, it doesn’t have to be totally contiguous, gaps in the ID may result in the code needing to do extra iterations but it wont cause any errors.

You will also need a numbers table, which is literally just a table with a single column with the numbers 1 to say 10,000 in it. As my source is SQL Server, I used a numbers table there but you could quite easily create another collection to do this for you, rather than a SQL Server table.

Below is a copy of the code used to import the data from a table in SQL Server into a collection, however I’m reasonably sure it should work with any data source as long as it can have a unique numeric ID for each record.

This code also updates a couple of context variables, which are displayed on the screen to the user to show the progress of the data loading into the collection. This is optional so feel free to remove it.

UpdateContext({cxtCurrentlyLoading: "MyBigTable1"});

ClearCollect(colProgress,{PC: 0});

UpdateContext({ctxMinRecID: First(SortByColumns('[dbo].[MyBigTable1]', "MyBigTable1ID", Ascending)).MyBigTable1ID});

UpdateContext({ctxMaxRecID: First(SortByColumns('[dbo].[MyBigTable1]', "MyBigTable1ID", Descending)).MyBigTable1ID});

UpdateContext({ctxNumberOfPasses: RoundUp((ctxMaxRecID - ctxMinRecID) / 500,0)});

ClearCollect(colNumbersTable, Filter('[dbo].[NumbersTable]', Number <= ctxNumberOfPasses));

Clear(colMyBigTable1DataRaw);

ForAll(

colNumbersTable

,Collect

(

colMyBigTable1DataRaw,

Filter

(

'[dbo].[MyBigTable1]'

,(MyBigTable1ID - 1) > ctxMinRecID + ((Number - 1) * 500) && MyBigTable1ID <= ctxMinRecID + (Number * 500)

)

);

UpdateIf

(

colProgress

,true

,{PC: (100 / ctxNumberOfPasses) * Number}

)

);

Let's break this down into sections.

The first two lines are simply updating the progress text on screen, if you don’t want this these can be removed but make sure to remove them from the other sections of code too

UpdateContext({cxtCurrentlyLoading: "MyBigTable1"});

ClearCollect(colProgress,{PC: 0});

The next two lines get the minimum and maximum possible ID from the data source. This will give us the range of records we need to work with and works because SortByColumns supports delegation.

UpdateContext({ctxMinRecID: First(SortByColumns('[dbo].[MyBigTable1]', "MyBigTable1ID", Ascending)).MyBigTable1ID});

UpdateContext({ctxMaxRecID: First(SortByColumns('[dbo].[MyBigTable1]', "MyBigTable1ID", Descending)).MyBigTable1ID});

Next we work out how may potential lumps of 500 we have by getting the difference between the min and max ID’s. This tells us how many times we need to go around the loop.

Once we know how many iterations we need, we can create a collection from our numbers table (see above), ensuring the collection only contains records equal to the required number of iterations.

UpdateContext({ctxNumberOfPasses: RoundUp((ctxMaxRecID - ctxMinRecID) / 500,0)});

ClearCollect(colNumbersTable, Filter('[dbo].[NumbersTable]', Number <= ctxNumberOfPasses));

Finally we call a clear on the main collection we will be loading into, to ensure it doesn’t have any residual data from a previous load and then iterate over the data source, once for each record in our numbers collection as defined in our previous step, loading data into the collection that will ultimately be used in the rest of the application. We also update the progress counter to display the currently loaded percentage.

In each iteration we ensure we only request 500 records to stay within the limit. This is done by using the Filter function, which supports delegation when used for simple filtering. The reason we have to stick to 500 is because the Collect function, used to populate the collection, doesn’t support delegation.

I then use the min ID recorded at the start of the process and multiply by 500 the current number from the numbers table for the given iteration, this gives me perfect 500 record chunks between the min and max record ID

Clear(colMyBigTable1DataRaw);

ForAll(

colNumbersTable

,Collect

(

colMyBigTable1DataRaw,

Filter

(

'[dbo].[MyBigTable1]'

,(MyBigTable1ID - 1) > ctxMinRecID + ((Number - 1) * 500) && MyBigTable1ID <= ctxMinRecID + (Number * 500)

)

);

UpdateIf

(

colProgress

,true

,{PC: (100 / ctxNumberOfPasses) * Number}

)

);

To finish up, I just want to caveat that I know this isn’t the prettiest solution going, but it does work well and under testing scales reasonably well into the 60,000 to 70,000 record mark, where a noticeable slowdown can be observed during the later iterations of the ForAll.

Anyway, I hope this has helped you both understand this feature and also how to work around it when needed. I will look to do another post on handling updates when using this method.

PowerApps is a great platform and is growing all the time, it has massive potential and now its been so closely coupled with Power BI the possibilities are really exciting!

Need Some More Assistance?

If there’s anything not covered in here, you’d like clarification on anything or you feel Coeo could help you with your PowerApps development needs please feel free to comment here or  and I'll be glad to assist.

and I'll be glad to assist.